Humans are excellent at working together: we practice cooperation when we play and use it when we work. Collaboration is essential everywhere, from construction sites to complex surgeries. It works so well because the human ability to read another person's motor intentions and react adequately is unparalleled. Two skilled technicians need little instruction to know how to hold up an element that the other is welding; a nurse needs little guidance when feeding a patient. Collaboration between humans and robots, however, remains far inferior to collaboration between humans. The idea of human-robot collaboration is not new. Collaborative robots (cobots) have been in development for at least two decades, yet substantial breakthroughs in this domain remain limited by safety concerns and by how unnatural the collaboration still feels.

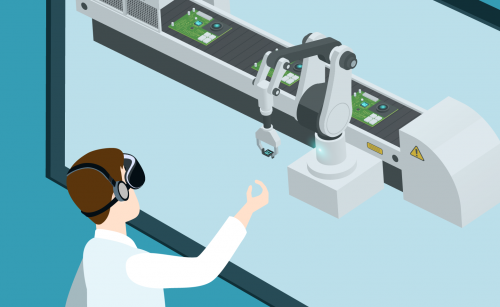

At Proaction Lab we seek to break this stalemate and enable humans and their robotic partners to work together, side by side. We aim to ensure that this collaboration feels safe and natural for humans. Together with our partners from the Institute of Systems and Robotics, we combine neuroscience with robotics to create an environment for developing adaptive, smart cobots by linking them with human brain activity. Our main goal is to harness the human brain's outstanding ability to recognize actions in order to build adaptive control systems for cobots (and possibly other devices). For this purpose, we use a brain-machine interface (BMI) approach to record human brain responses during collaboration with robots.

In collaboration with the University of Madeira, we are working on another aspect of this project: the naturalness of manual collaboration between humans and different types of robots. Consider C-3PO and R2-D2, two robotic protagonists of the “Star Wars” saga. The former is human-like, and we expect it to behave like a human. His companion, by contrast, is as far from a human being as possible, with no hands to shake and no face to smile with. How do humans cooperate with robots that do not resemble a human being? Do we cooperate more intuitively with human-like robots because our brains are hardwired to collaborate with other humans? We want to answer these questions. Virtual reality lets us simulate any sort of robot, from currently available industrial robots such as Baxter to entirely imaginary robots without physical joints. It also offers one more benefit: we can test any scenario without putting humans at risk of injury.